Regularization Machine Learning Mastery

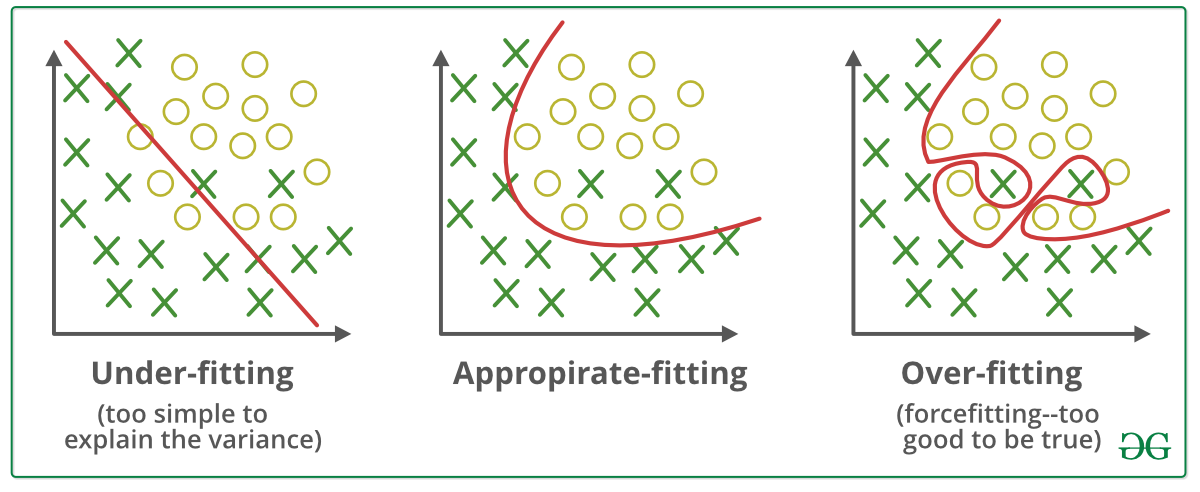

Regularization is one of the most widely used techniques for penalizing complex models in machine learning. It is deployed to reduce overfitting, or equivalently to shrink the generalization error, by keeping the network weights small.

How To Improve Performance With Transfer Learning For Deep Learning Neural Networks

Regularization is used in machine learning as a solution to overfitting by reducing the variance of the ML model under consideration.

One of the major aspects of training a machine learning model is avoiding overfitting. Dropout is a technique in which randomly selected neurons are ignored during training.
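To make "randomly selected neurons are ignored" concrete, here is a minimal plain-Python sketch of dropout applied to one layer's activations. It uses the common "inverted dropout" variant, which rescales the surviving units by 1 / (1 - rate) during training so nothing changes at inference; the 2014 paper by Srivastava et al. instead scales the weights at test time. The function name `dropout` and its parameters are illustrative assumptions, not a library API.

```python
import random


def dropout(activations, rate, training=True, rng=None):
    """Inverted dropout on a list of activations (illustrative sketch).

    During training, each unit is zeroed with probability `rate` and the
    survivors are scaled by 1 / (1 - rate), keeping the expected value of
    every activation unchanged. At inference the input passes through as is.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    if rng is None:
        rng = random.Random()
    keep_prob = 1.0 - rate
    # rng.random() < keep_prob keeps a unit with probability keep_prob.
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


if __name__ == "__main__":
    rng = random.Random(0)
    x = [1.0] * 10
    print(dropout(x, rate=0.5, rng=rng))          # each entry is 0.0 or 2.0
    print(dropout(x, rate=0.5, training=False))   # unchanged: ten 1.0 values
```

Because each call draws a fresh mask, the network cannot rely on any single neuron being present, which is the regularizing effect the text describes.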

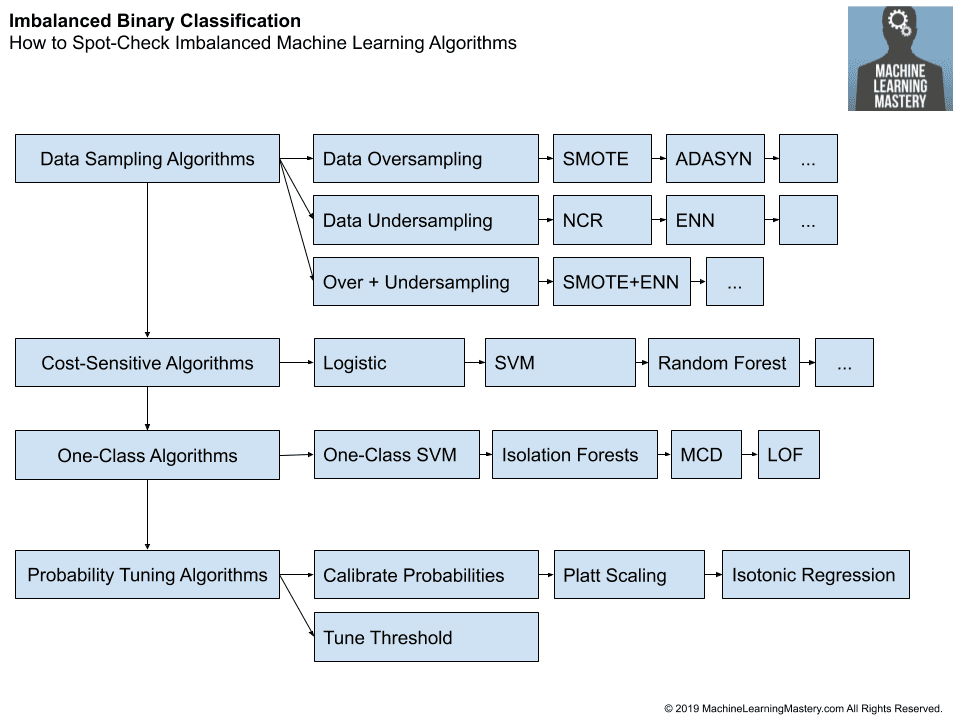

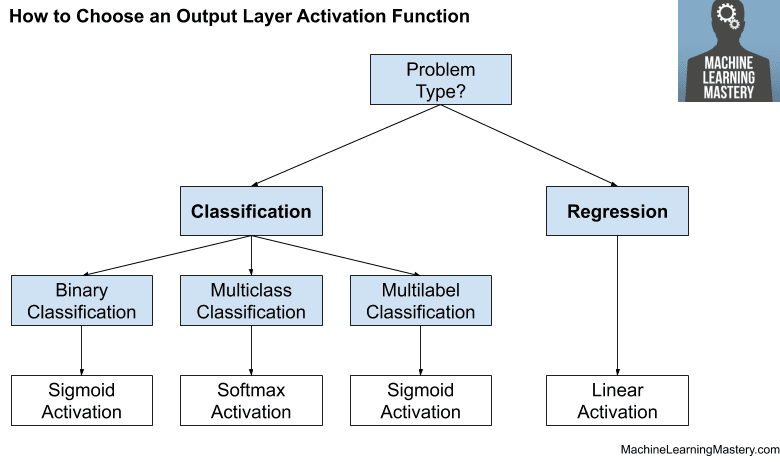

Regularization can be implemented in multiple ways: by modifying the loss function, the sampling method, or the training approach itself. By noise we mean the data points that don't really represent the true properties of the data, but random chance. The penalty controls the model complexity: larger penalties yield simpler models.

Machine learning involves equipping computers to perform specific tasks without explicit instructions. The included `regularization_ridge.py` file contains the code that adds ridge regression.
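The contents of that file are not shown here, so the following is only a hypothetical sketch of ridge regression for a single feature, in plain Python. It assumes the model y ≈ w·x + b with the penalty λ·w² applied to the slope only (the intercept is left unpenalized); `ridge_fit_1d` is an illustrative name. Centering the data removes the intercept, giving the closed form w = Sxy / (Sxx + λ), which shows directly how a larger penalty shrinks the slope toward zero.

```python
def ridge_fit_1d(xs, ys, lam):
    """Fit y ~ w * x + b by minimizing sum((y - w*x - b)**2) + lam * w**2.

    The intercept b is not penalized. Setting the derivative with respect to b
    to zero gives b = mean(y) - w * mean(x); substituting back leaves
    w = Sxy / (Sxx + lam), where Sxx and Sxy are centered sums.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("xs and ys must be non-empty and the same length")
    if lam < 0:
        raise ValueError("lam must be non-negative")
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    if sxx + lam == 0:
        raise ValueError("need variation in xs or a positive lam")
    w = sxy / (sxx + lam)
    b = y_mean - w * x_mean
    return w, b


if __name__ == "__main__":
    xs, ys = [0, 1, 2, 3], [1, 3, 5, 7]  # points on y = 2x + 1
    for lam in (0.0, 5.0, 15.0):
        print(lam, ridge_fit_1d(xs, ys, lam))
    # 0.0 (2.0, 1.0)
    # 5.0 (1.0, 2.5)
    # 15.0 (0.5, 3.25)
```

With λ = 0 this is ordinary least squares and recovers the true line; each increase in λ flattens the fitted line, trading a little training error for a simpler model.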

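The general form of a regularization problem is $\min_{f} \sum_{i=1}^{n} V(f(x_i), y_i) + \lambda R(f)$, where $V$ is the loss on each training example, $R$ is the regularization term that penalizes model complexity, and $\lambda$ controls how strongly that term is weighted.

Srivastava et al. introduced dropout in their 2014 paper "Dropout: A Simple Way to Prevent Neural Networks from Overfitting."

Regularization Terms by Göktuğ Güvercin.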
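The general form $\sum_i V(f(x_i), y_i) + \lambda R(f)$ can be written as a small function with the loss $V$ and the regularizer $R$ passed in as arguments. This is a sketch under stated assumptions: the model is parametric, $R$ is applied to its parameters rather than to $f$ itself, and the helpers (`regularized_objective`, `squared_loss`, `l2_penalty`, `line_through_origin`) are hypothetical names chosen for illustration.

```python
from typing import Callable, Sequence

Params = Sequence[float]


def regularized_objective(
    predict: Callable[[Params, float], float],
    params: Params,
    xs: Sequence[float],
    ys: Sequence[float],
    loss: Callable[[float, float], float],
    penalty: Callable[[Params], float],
    lam: float,
) -> float:
    """Return sum_i V(f(x_i), y_i) + lam * R(params).

    `loss` plays the role of V and `penalty` the role of R.
    """
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    data_term = sum(loss(predict(params, x), y) for x, y in zip(xs, ys))
    return data_term + lam * penalty(params)


def squared_loss(pred: float, y: float) -> float:
    return (pred - y) ** 2


def l2_penalty(params: Params) -> float:
    # Sum of squared weights: the ridge (L2) regularizer.
    return sum(p ** 2 for p in params)


def line_through_origin(params: Params, x: float) -> float:
    (w,) = params
    return w * x


if __name__ == "__main__":
    xs, ys = [1.0, 2.0], [2.0, 4.0]  # both points lie on y = 2x
    for lam in (0.0, 0.5):
        print(lam, regularized_objective(line_through_origin, [2.0], xs, ys,
                                         squared_loss, l2_penalty, lam))
    # 0.0 0.0
    # 0.5 2.0
```

Swapping in a different `loss` or `penalty` changes the specific method (for example, ridge regression is the squared loss paired with `l2_penalty`) without changing the overall structure of the objective.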

Regularization is one of the most important concepts in machine learning. It is any modification we make to a learning algorithm that is intended to reduce its generalization error but not its training error. Let us understand this concept in detail.

The answer is regularization. While optimization algorithms try to reach the global minimum of the loss curve, what they actually decrease is the first term in these loss functions, that is, the summation part.

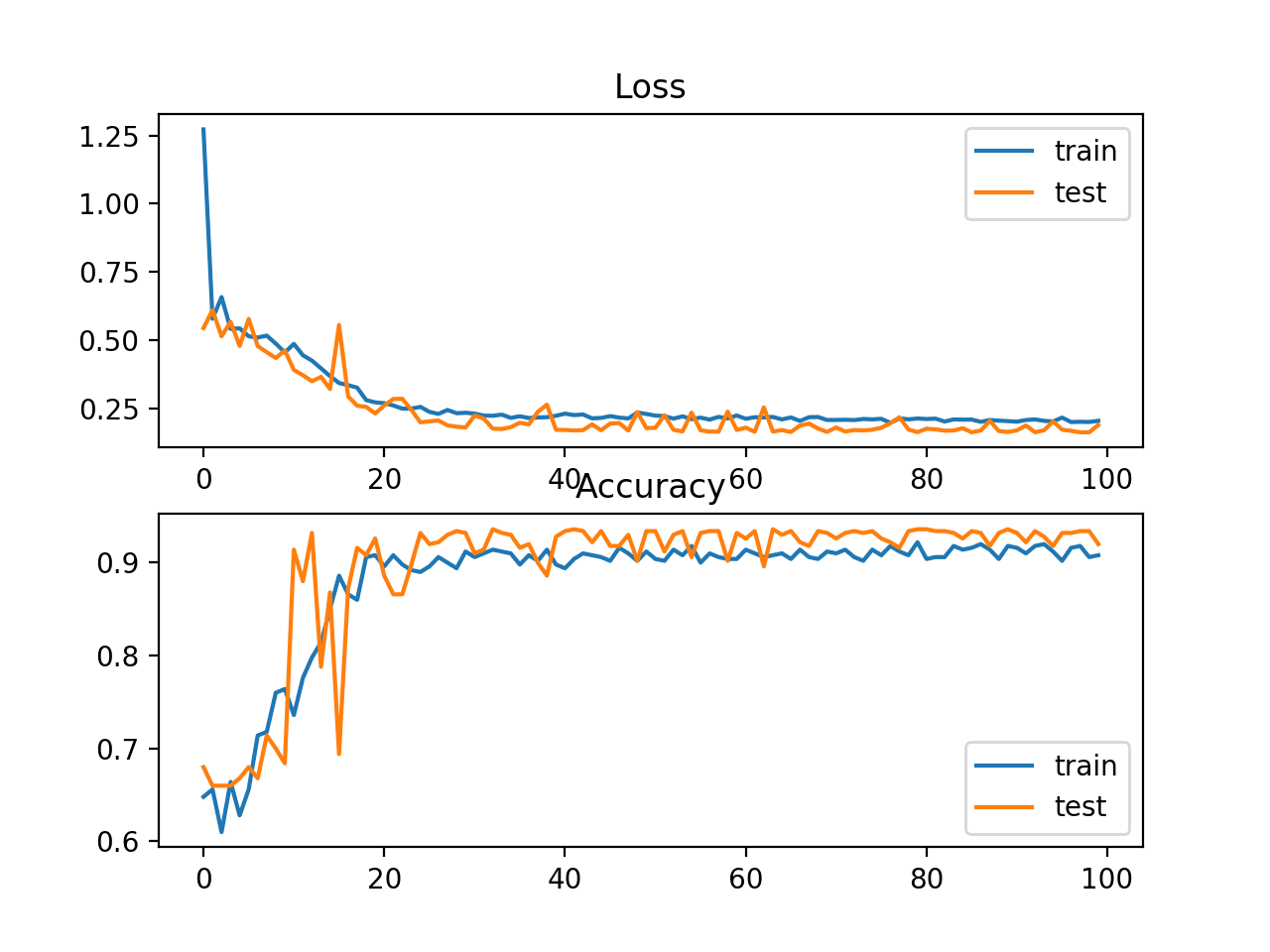

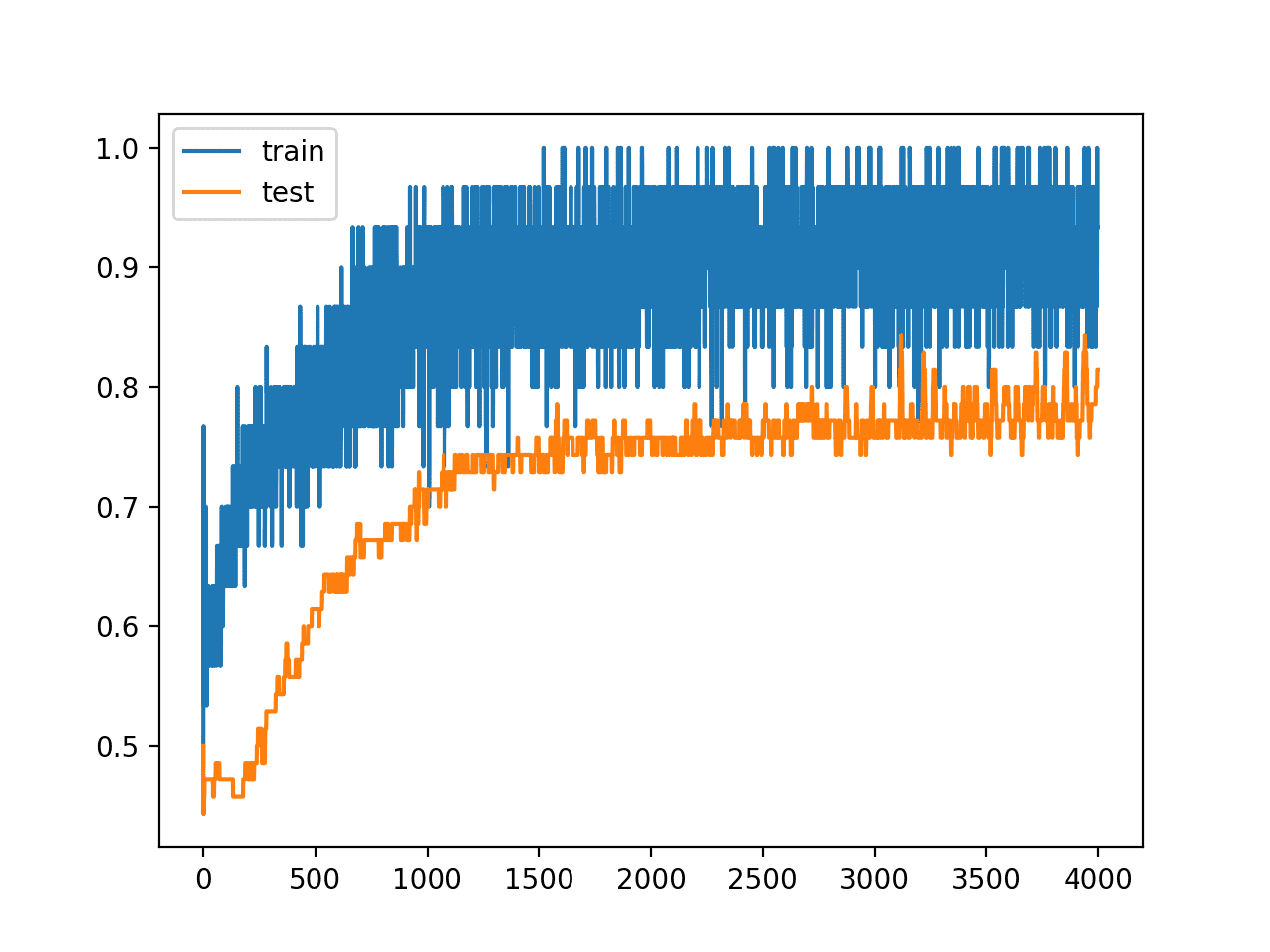

Sometimes a machine learning model performs well on the training data but does not perform well on the test data. This means the model is not able to generalize to unseen data: it is overfitting, and its accuracy on new data will be low. A simpler, regularized model will probably do a better job against future data.

Data scientists typically use regularization to tune their models during the training process. Regularization discourages the model from overfitting the data and follows Occam's razor. For ridge regression, the cost function is $\text{Cost} = \text{Loss} + \lambda \times w^2$, so adding ridge regression is as simple as adding this one extra penalty term to the loss. For a linear regression line, consider two points that lie on the line, so Loss = 0 (both points are fit exactly), and let $\lambda = 1$.

In machine learning, regularization imposes an additional penalty on the cost function. Data augmentation and early stopping are also used as regularization methods. However, as the optimizer drives the loss term down, the length of the weight vector tends to increase at the same time.
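Early stopping, one of the methods named above, can be sketched as a patience rule over a sequence of validation losses: stop once the loss has failed to improve for a set number of consecutive epochs, and keep the best epoch seen so far. The function `early_stopping_epoch` and its `patience` and `min_delta` parameters are illustrative assumptions, not any specific library's API.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the index of the epoch whose weights early stopping would keep.

    Training stops after `patience` consecutive epochs in which the
    validation loss fails to drop below the best loss so far by more than
    `min_delta`. Returns None if `val_losses` is empty.
    """
    if patience < 1:
        raise ValueError("patience must be at least 1")
    best_epoch, best_loss = None, float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, waited = epoch, loss, 0  # new best: reset
        else:
            waited += 1
            if waited >= patience:
                break  # stop training; later epochs are never seen
    return best_epoch


if __name__ == "__main__":
    losses = [1.0, 0.8, 0.6, 0.65, 0.7, 0.72, 0.5]
    print(early_stopping_epoch(losses, patience=3))  # 2
    print(early_stopping_epoch(losses, patience=4))  # 6
```

The patience value is the design trade-off: a small value stops as soon as validation loss rises (limiting overfitting), while a larger one tolerates temporary plateaus at the cost of extra training.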

The cheat sheet below summarizes different regularization methods, including dropout regularization for neural networks. Regularization is a technique that prevents the model from overfitting by adding extra information to it.

Consider the graph illustrated below, which represents linear regression. Machine learning systems are programmed to learn and improve from experience automatically. Dropout is a regularization technique for neural network models proposed by Srivastava et al.

Regularization also improves the performance of models on unseen data. In the two-point linear regression example (Loss = 0, $\lambda = 1$), a slope of $w = 1.4$ gives $\text{Cost} = 0 + 1 \times 1.4^2 = 1.96$.
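The arithmetic of the two-point example can be checked with a short function. It assumes, as in the usual ridge setup, that Loss is the sum of squared residuals, and that the line has a single weight w = 1.4 with λ = 1; the name `ridge_cost` is a hypothetical helper.

```python
def ridge_cost(residuals, weights, lam):
    """Ridge cost: sum of squared residuals plus lam times the sum of squared weights."""
    loss = sum(r ** 2 for r in residuals)
    penalty = sum(w ** 2 for w in weights)
    return loss + lam * penalty


if __name__ == "__main__":
    # Two points lying exactly on the line: both residuals are 0, so Loss = 0.
    cost = ridge_cost(residuals=[0.0, 0.0], weights=[1.4], lam=1.0)
    print(round(cost, 2))  # 1.96
```

Even though the line fits both points perfectly, the cost is not zero: the penalty charges for the size of the slope, which is what lets a flatter line with small residuals win under ridge regression.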

Hence the value of the regularization term rises. Regularization can be split into two buckets. Overfitting happens because the model is trying too hard to capture the noise in the training dataset.
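The tug-of-war described here, in which minimizing the summation term pushes the weights up while the regularization term pushes them back, shows up even in a one-weight model trained by gradient descent. This sketch assumes a line through the origin, mean squared error as the data term, and an L2 penalty λ·w²; `fit_slope_gd`, the learning rate, and the step count are illustrative choices.

```python
def fit_slope_gd(xs, ys, lam, lr=0.01, steps=2000):
    """Gradient descent on (1/n) * sum((w*x - y)**2) + lam * w**2 for y ~ w*x.

    The first term is the data (summation) term; the second is the L2
    regularization term, whose gradient 2 * lam * w pulls w back toward 0.
    """
    n = len(xs)
    w = 0.0
    for _ in range(steps):
        grad_data = sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        grad_penalty = 2.0 * lam * w
        w -= lr * (grad_data + grad_penalty)
    return w


if __name__ == "__main__":
    xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]  # points on y = 2x
    for lam in (0.0, 1.0):
        w = fit_slope_gd(xs, ys, lam)
        print(f"lam={lam}: w={w:.4f}, penalty term={lam * w * w:.4f}")
    # Converges to w = 2.0 for lam=0.0 and to w = 28/17 (about 1.647) for lam=1.0.
```

Without the penalty, the weight grows from 0 until it minimizes the data term alone; with λ = 1 it settles where the two gradients balance, at (Σxy/n) / (Σx²/n + λ) = 28/17.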

The simpler model is usually the more correct one. In Figure 4, the black line represents a model without ridge regression applied, and the red line represents a model with ridge regression applied. Note how much smoother the red line is.

A Tour Of Machine Learning Algorithms

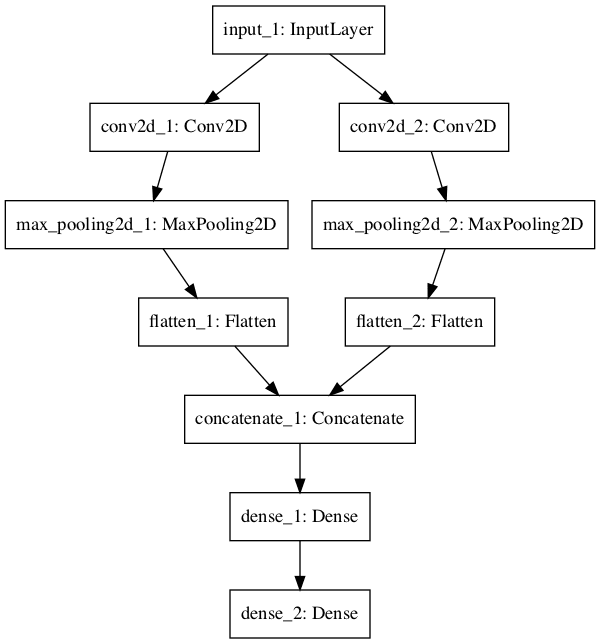

How To Use The Keras Functional API For Deep Learning

Regularization In Machine Learning And Deep Learning, by Amod Kolwalkar, Analytics Vidhya (Medium)

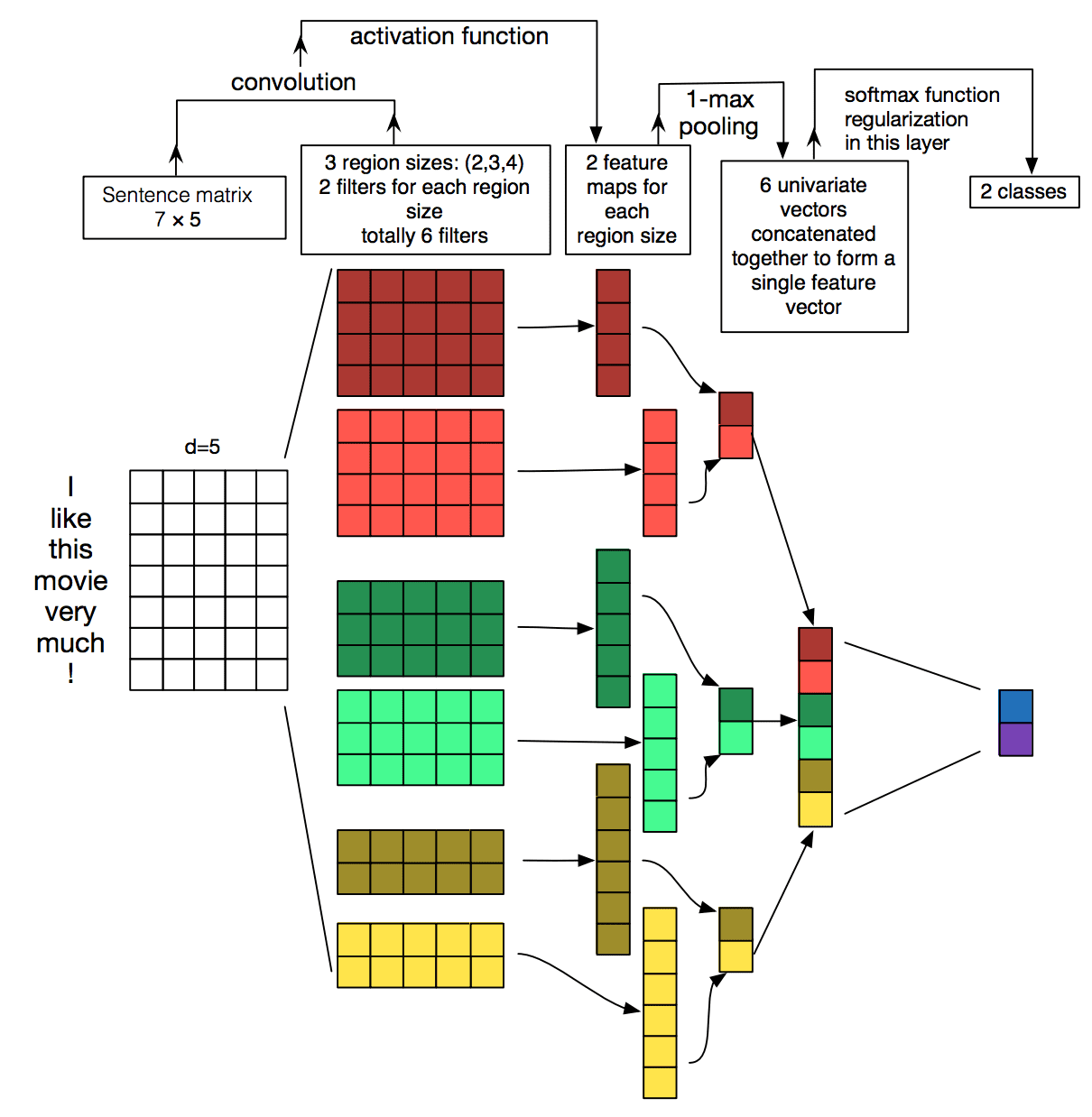

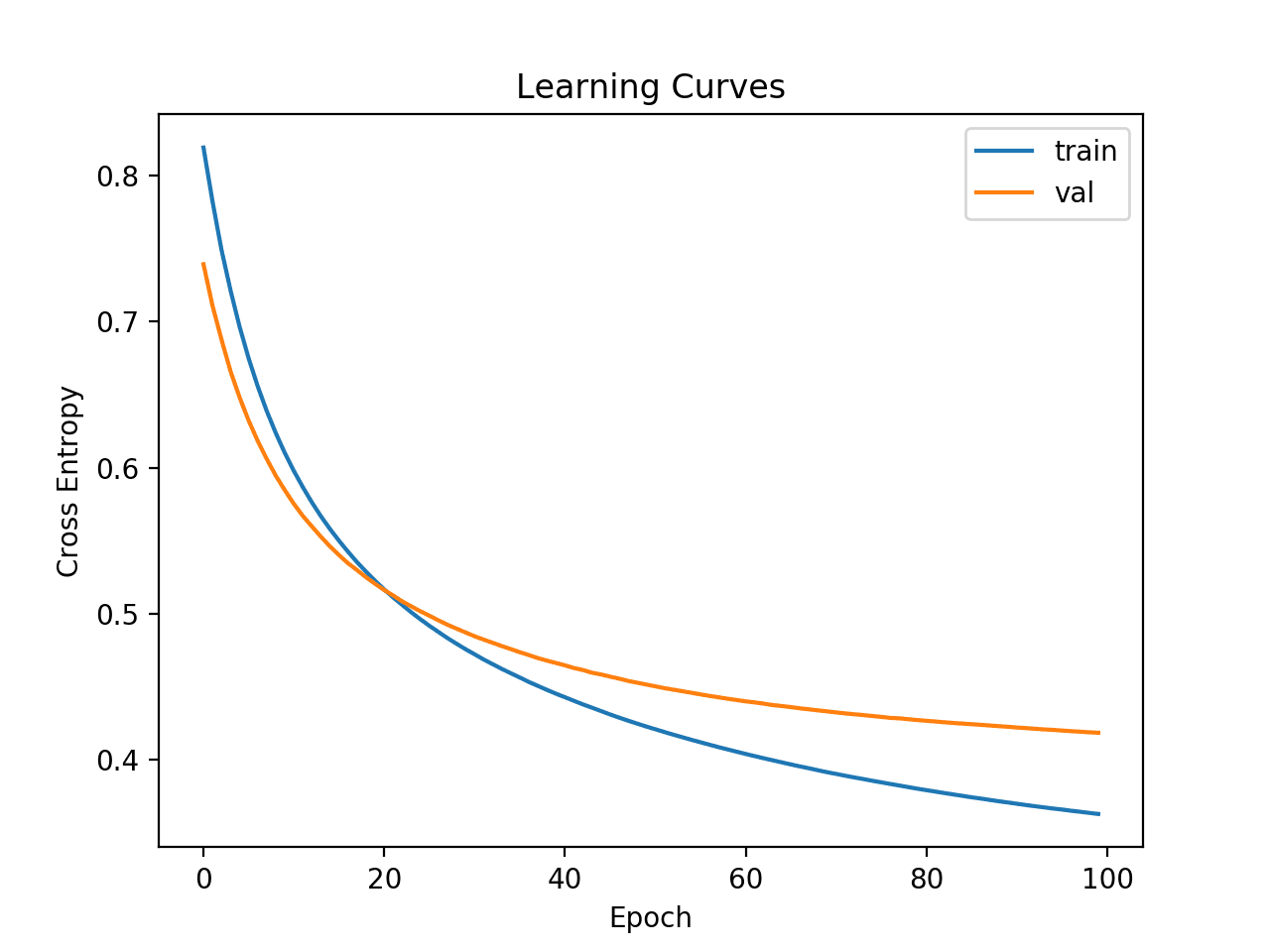

Best Practices For Text Classification With Deep Learning

A Gentle Introduction To Dropout For Regularizing Deep Neural Networks

A Gentle Introduction To The Rectified Linear Unit (ReLU)

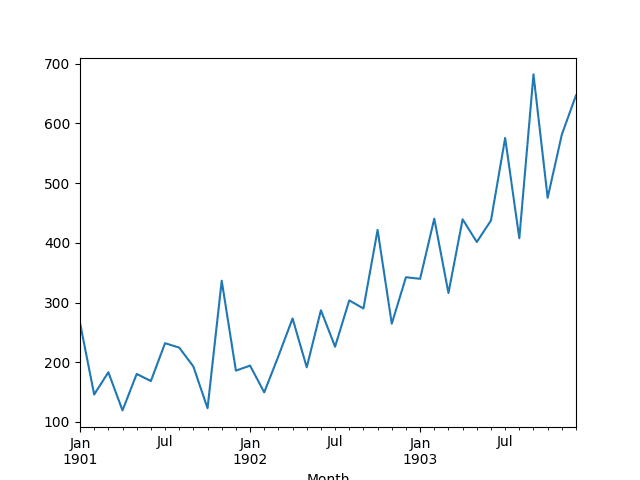

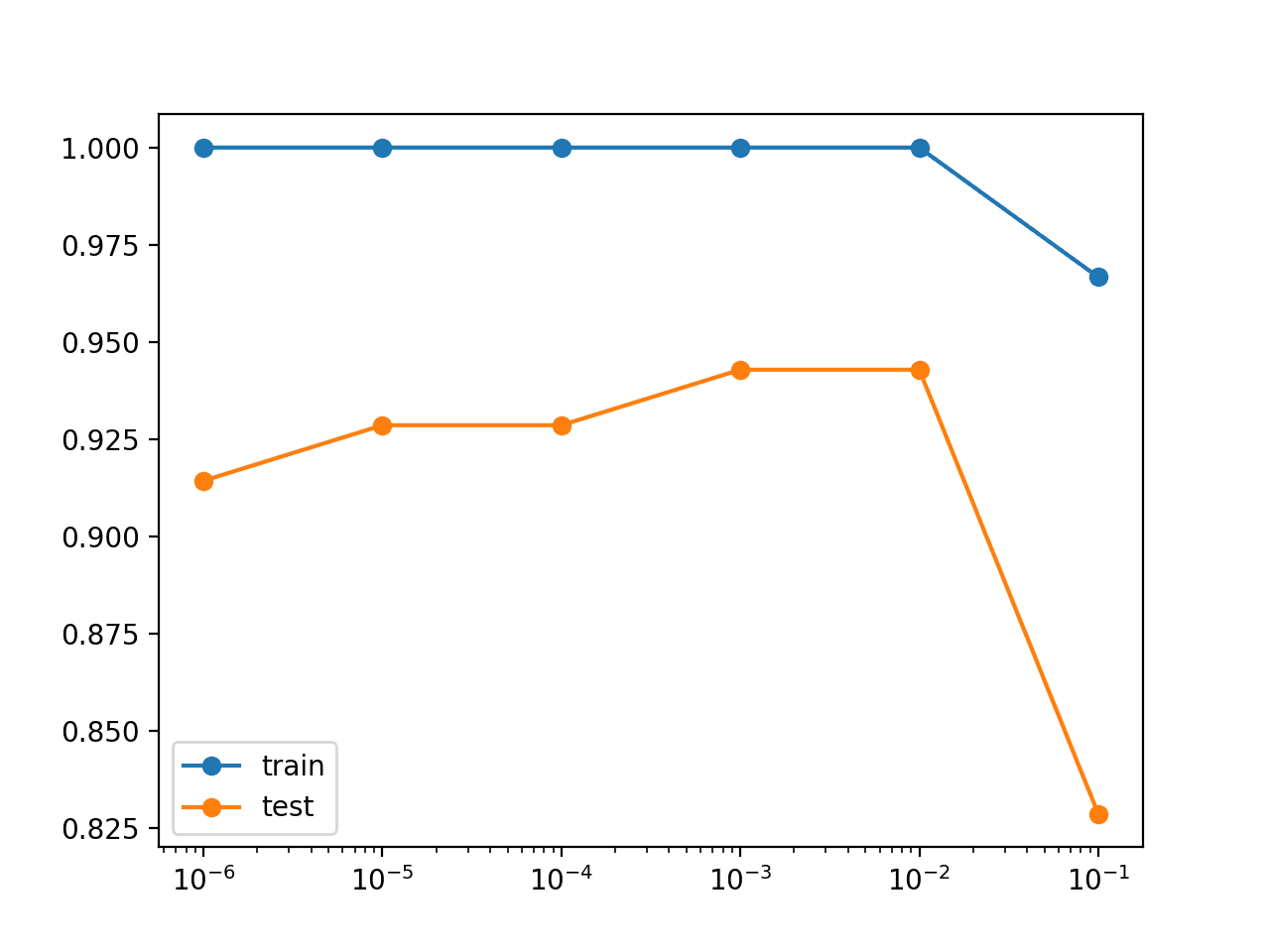

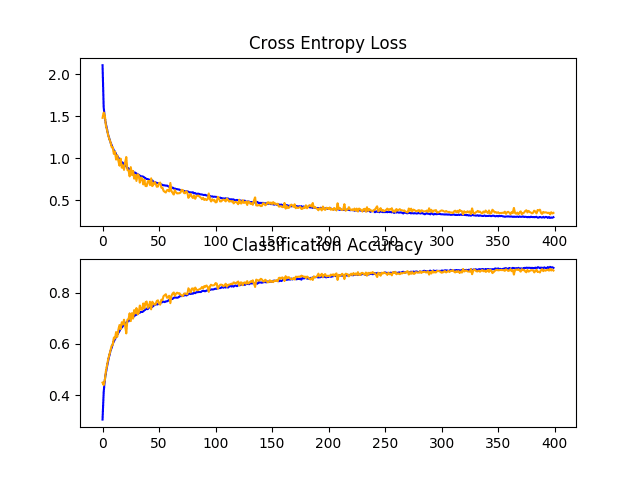

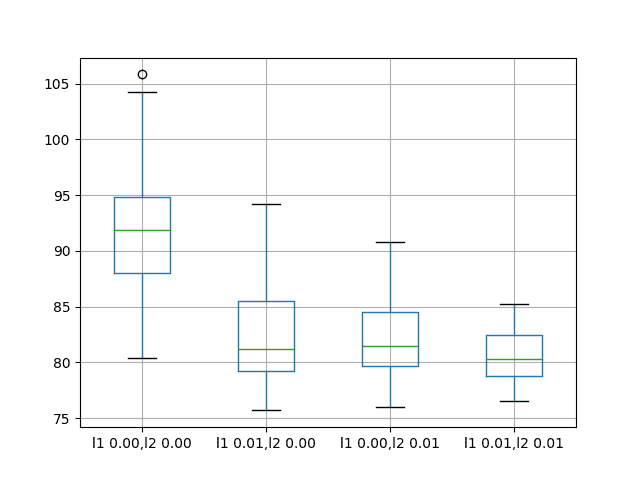

Weight Regularization With LSTM Networks For Time Series Forecasting

Linear Regression For Machine Learning

Issue 4 Out Of The Box AI Ready The AI Verticalization Revue

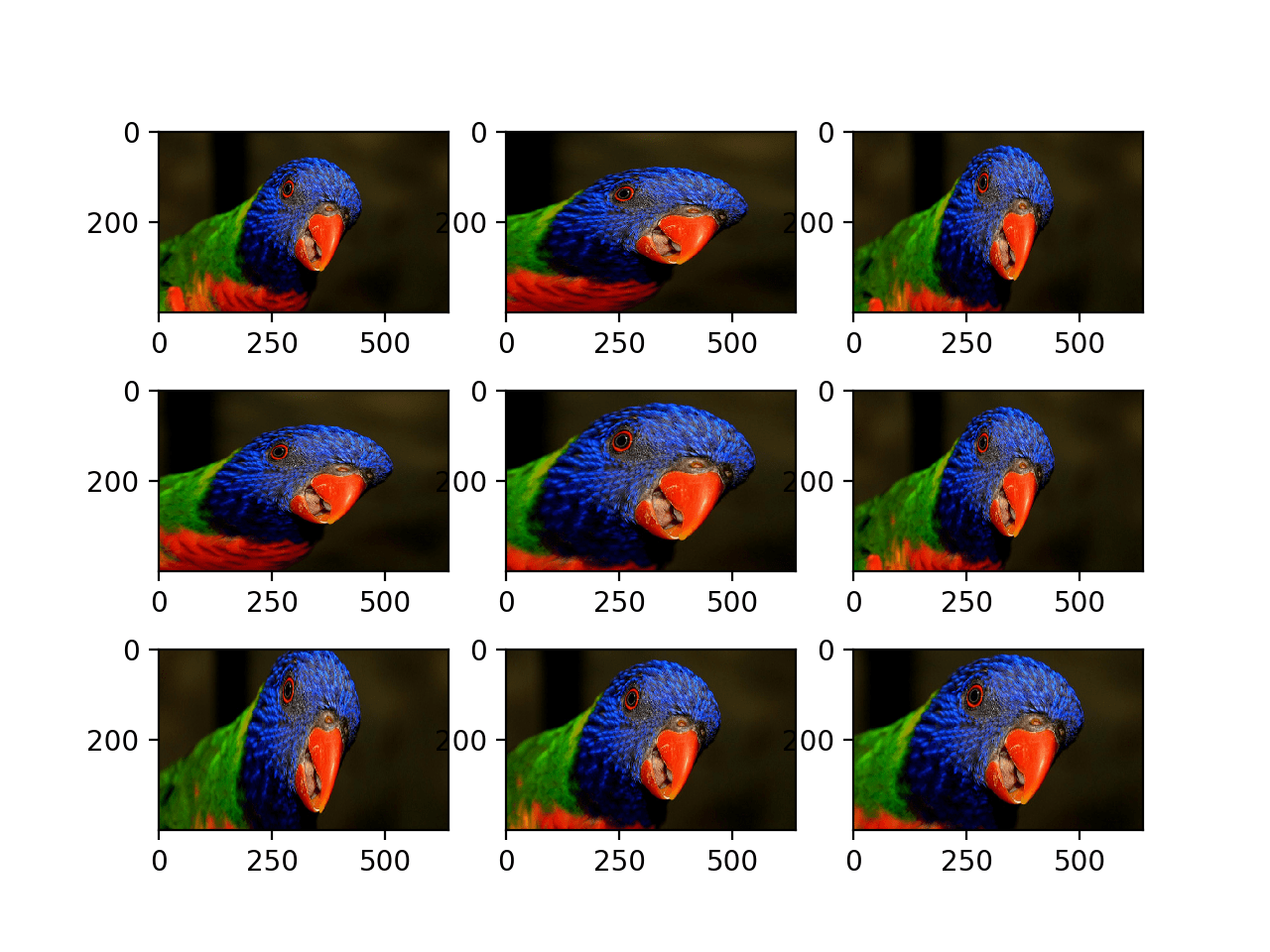

How To Configure Image Data Augmentation In Keras

Start Here With Machine Learning
